
Expectations are High for Advanced Anomaly Detection

In July 2017, Gartner released its latest Hype Cycle for Data Science and Machine Learning, advising data and analytics leaders to use the report to better understand the data science and machine learning landscape and to evaluate their current capabilities and technology adoption prospects. The report states that "to succeed with data and analytics initiatives, enterprises must develop a holistic view of critical technology capabilities."

One of the technologies Gartner places on the 'On the Rise' side of the curve is Advanced Anomaly Detection.

Advanced Anomaly Detection is Accelerating

The report expects Advanced Anomaly Detection to reach the mainstream within the next ten years, and explains that "Anomaly detection is relevant in situations where the existence, nature and extent of disruptions cannot be predicted. Systems that use advanced anomaly detection are more effective than those that use simpler techniques. They can detect subtle anomalies that might otherwise escape notice, provide earlier warning of impending problems or more time to capitalize on emerging opportunities, reduce the human effort required to develop a monitoring or measuring application, and reduce the time to solution for implementing complicated anomaly detection systems."

In the report, Gartner senior analyst Peter Krensky and Gartner VP and distinguished analyst W. Roy Schulte offer their view of how Advanced Anomaly Detection will affect companies and what benefits they stand to gain. They say that “virtually every company has some aspects of operations for which it is important to distinguish between routine conditions and matters that require extra attention. Much of advanced anomaly detection is a competitive advantage today, but we expect most of the current technology driving it will be widespread and even taken for granted within 10 years because of its broad applicability and benefits. Advanced anomaly detection is already being embedded in many enterprise applications.”

Forces Driving the Need for Advanced Anomaly Detection

In our experience, the main driver behind the need for Advanced Anomaly Detection solutions is that businesses must manage ever-growing amounts of data and track more and more metrics. Costly glitches are an unavoidable consequence of the scale and speed of today’s business, especially when code can be changed in seconds.

Moreover, because many processes run simultaneously, many businesses assign each activity to a different person or team to monitor. Anomalies in one area often affect performance in other areas, but the connection is hard to make when departments operate independently of one another.

Because businesses are constantly generating new data, they need a solution that can analyze metrics holistically and give people the insights they need to understand what is going on.

Applying Advanced Anomaly Detection

Looking at how Advanced Anomaly Detection has already been applied, the analysts note that "its use is increasing in applications as diverse as enterprise security, unified monitoring and log analysis, application and network performance monitoring, business activity monitoring (including business process monitoring), Internet of Things predictive equipment maintenance, supply chain management, and corporate performance."

High Business Impact of Advanced Anomaly Detection

Gartner rated the maturity of Advanced Anomaly Detection as ‘Emerging’, meaning that the technology is expected to continue to grow and evolve.

The strong momentum behind Advanced Anomaly Detection has drawn the attention of innovative vendors. The report lists sample vendors that offer Advanced Anomaly Detection solutions, including Anodot.

Our Take on the Gartner Hype Cycle on Data Science and Machine Learning

Each year, Gartner produces Hype Cycle reports that provide a graphic representation of the maturity and adoption of technologies and applications, and of how they are potentially relevant to solving real business problems and exploiting new opportunities.

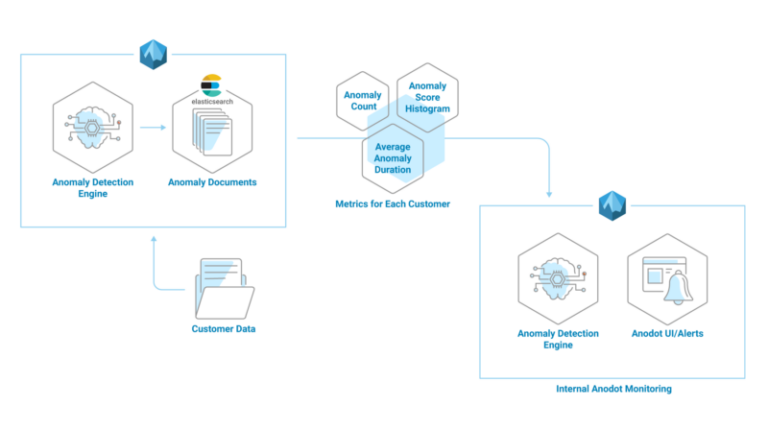

As we see it, the challenge with big data is finding the right insights, or more precisely, the business incidents that actually matter. While Gartner outlines a clear need and drive for Advanced Anomaly Detection in data-driven businesses, we believe mainstream adoption will arrive faster than the report projects: businesses that implement it will enjoy a clear competitive advantage, pushing competitors in their segment to add Advanced Anomaly Detection capabilities quickly.

Many systems try to find events and data that are not normal, yet these systems often fail, flagging too many anomalies (false positives) or too few (false negatives). Applying machine learning to rapidly changing environments means constantly updating and retraining models to avoid false positives.
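To make the false-positive/false-negative trade-off concrete, here is a minimal sketch of a simple rolling z-score detector in Python. It is not Anodot's method and is far simpler than the advanced techniques Gartner describes; the function name `detect_anomalies`, the window size, the thresholds and the sample metric are all illustrative assumptions. Recomputing the baseline from a sliding window of recent points is a crude stand-in for the constant model updating that changing environments require.

```python
from collections import deque
from statistics import mean, stdev


def detect_anomalies(series, window=5, threshold=3.0):
    """Return indices of points whose z-score against a rolling baseline exceeds `threshold`.

    Hypothetical helper for illustration only. The baseline (mean and sample
    standard deviation) is recomputed from the previous `window` points, so it
    adapts as the metric's normal behavior drifts.
    """
    if window < 2:
        raise ValueError("window must be at least 2 to estimate a standard deviation")
    history = deque(maxlen=window)
    flagged = []
    for i, value in enumerate(series):
        if len(history) == window:
            mu = mean(history)
            # Small floor avoids division by zero on a perfectly flat baseline.
            sigma = max(stdev(history), 1e-9)
            if abs(value - mu) / sigma > threshold:
                flagged.append(i)
        # Every point, anomalous or not, joins the baseline window.
        history.append(value)
    return flagged


# Illustrative data: a steady metric with one obvious spike at index 7.
metric = [10, 11, 10, 12, 11, 10, 11, 30, 11, 10, 12, 11]
print(detect_anomalies(metric, threshold=3.0))   # [7]
print(detect_anomalies(metric, threshold=0.9))   # [5, 7]  index 5 is a false positive
print(detect_anomalies(metric, threshold=25.0))  # []      the spike is missed: a false negative
```

A single fixed threshold either over-alerts or under-alerts, and because the spike enters the window, it inflates the baseline's spread for the next few points and can mask a follow-up anomaly. These are the kinds of weaknesses that more advanced, continuously updated models aim to address.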

Our AI analytics solution, Anodot, offers autonomous analytics. Instead of users having to ask many questions and carry out complex data analysis, Anodot does the work and provides the answers needed to understand why an incident happened.

Next Step

Download our white paper to explore and weigh the issues around the build-or-buy dilemma for Advanced Anomaly Detection.

All statements in this report attributable to Gartner represent Anodot’s interpretation of data, research opinion or viewpoints published as part of a syndicated subscription service by Gartner, Inc., and have not been reviewed by Gartner. Each Gartner publication speaks as of its original publication date (and not as of the date of this report). The opinions expressed in Gartner publications are not representations of fact, and are subject to change without notice.

Gartner does not endorse any vendor, product or service depicted in its research publications, and does not advise technology users to select only those vendors with the highest ratings or other designation. Gartner research publications consist of the opinions of Gartner's research organization and should not be construed as statements of fact. Gartner disclaims all warranties, expressed or implied, with respect to this research, including any warranties of merchantability or fitness for a particular purpose.

Gartner, Hype Cycle for Data Science and Machine Learning, 2017, 28 July 2017
