Videos & Podcasts

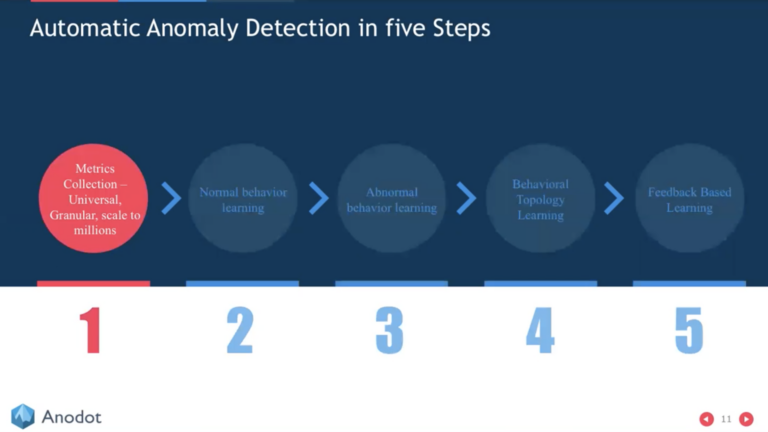

Webinar: Real-Time Anomaly Detection & Analytics for Digital Business

Watch to learn how predictive anomaly detection can surface business incidents in real time, and hear how companies are using it to prevent revenue loss.
