If you happen to be running multiple clusters, each with a large number of services, you’ll find that it’s rather impractical to use static alerts, such as “number of pods < X” or “ingress requests > Y”, or to simply measure the number of HTTP errors. Values fluctuate for every region, data center, cluster, etc.

Adjusting alerts by hand is difficult, and when it isn’t done properly you either get far too many false positives or you miss a key event.

For effective monitoring, it’s critical that your system automatically understands the severity of an incident and whether it has the potential to become catastrophic. Decisions such as when to wake someone up at night, when to scale out, or when to apply back pressure must be made automatically.

If you’re already using Kubernetes, you’ve clearly made a commitment to digital transformation. You’re probably using the auto-healing mechanism to resolve issues. So it hardly makes sense to be manually setting alerts, a key process in your AIOps workflow.

It’s analogous to having an autonomous car without the built-in navigation system. It wouldn’t make sense.

Given our experience running Kubernetes in large-scale production environments, we’d like to share our guidelines for getting the most efficient alerting process. By adopting an AI monitoring system, your organization can use machine learning to constantly track millions of events in real time and to alert you when needed. This will enable your team to refocus their efforts on mission-critical tasks.

So whether you’ve already implemented AI monitoring, or you’re thinking about doing so, here are our five best practices for managing autonomous alerts:

1. Track the API Gateway for Microservices in Order to Automatically Detect Application Issues

Although granular resource metrics such as CPU, load and memory are important for identifying Kubernetes microservice issues, they are hard to interpret on their own. To quickly understand when a microservice has issues, there are no better KPIs than API metrics such as call errors, request rate and latency. These immediately point to a degradation in some component within the specific service.

The easiest way to learn service-level metrics is to automatically detect anomalies in REST API requests at the service’s load balancer, ideally at an Ingress Controller such as Nginx or Istio. These metrics are agnostic to the actual service, so all Kubernetes services can be measured in the same way. Alerts can be set at any level of the REST API (or any API), including customer-specific behavior in a multi-tenant SaaS.
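As a rough illustration of what "service-agnostic" API KPIs can look like, here is a minimal Python sketch that derives request rate, error rate and p95 latency per service from ingress request records. The `Request` record and its field names are hypothetical, and counting only 5xx responses as errors is an assumption; a real ingress controller exposes this data in its own format.

```python
from collections import defaultdict
from dataclasses import dataclass
from math import ceil


@dataclass
class Request:
    service: str       # hypothetical: backend service the ingress routed to
    status: int        # HTTP status code returned to the caller
    latency_ms: float  # latency as observed at the ingress


def percentile(values, pct):
    """Nearest-rank percentile of a non-empty list."""
    ordered = sorted(values)
    rank = max(1, ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def service_kpis(requests, window_seconds):
    """Return {service: {request_rate, error_rate, p95_latency_ms}}."""
    by_service = defaultdict(list)
    for req in requests:
        by_service[req.service].append(req)

    kpis = {}
    for service, reqs in by_service.items():
        # Assumption: only 5xx responses count as service errors.
        errors = sum(1 for r in reqs if r.status >= 500)
        kpis[service] = {
            "request_rate": len(reqs) / window_seconds,
            "error_rate": errors / len(reqs),
            "p95_latency_ms": percentile([r.latency_ms for r in reqs], 95),
        }
    return kpis
```

Because the same three numbers are computed for every service, each resulting time series can be fed to whatever anomaly detection you use without service-specific tuning.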

Here is an example of an increase in request count to one of the microservices that was detected automatically.

An example of a latency drop for one of the services, identified automatically:

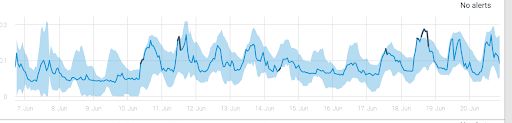

Look at how the baseline changes in the graph above. If there had been a static threshold here, the user would have had to manually adjust for this new state, or baseline.

Rule-based alerts (aka static alerts) would not work here for several reasons. Chiefly, with hundreds of different APIs, each with its own behavioral patterns, there is no feasible way to maintain a static alert for each of them. AI monitoring automatically adjusts to the new baseline, which removes much of that maintenance.

Now, if you’re more interested in dramatic changes: sure, anyone can identify when metrics drop to zero, but by that point you’re just trying to put out the fire. With the scale and granularity of AI analytics, pattern changes are detected long before metrics drop to zero. And the ability to adapt to fluctuating patterns reduces false positives and the ensuing maintenance, while also shortening time to detection.
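To make the contrast with a static threshold concrete, here is a deliberately simple Python sketch of an adaptive baseline: a rolling median with a median-absolute-deviation (MAD) band. This is not the algorithm any particular AI monitoring product uses; the class name and the `window`, `tolerance`, `min_samples` and `min_spread` parameters are all illustrative assumptions.

```python
from collections import deque
from statistics import median


class RollingBaseline:
    """Flags values that stray far from the recent median of the series."""

    def __init__(self, window=60, tolerance=5.0, min_samples=10, min_spread=1.0):
        self.history = deque(maxlen=window)  # only the most recent values
        self.tolerance = tolerance           # allowed deviation, in spreads
        self.min_samples = min_samples       # warm-up before any alerting
        self.min_spread = min_spread         # floor so flat series aren't hypersensitive

    def observe(self, value):
        """Return True if value is anomalous, then learn it either way."""
        anomalous = False
        if len(self.history) >= self.min_samples:
            center = median(self.history)
            mad = median(abs(v - center) for v in self.history)
            spread = max(mad, self.min_spread)
            anomalous = abs(value - center) > self.tolerance * spread
        # Learning every value lets the baseline follow a sustained level shift.
        self.history.append(value)
        return anomalous
```

With a short window, a sustained shift from around 100 to around 200 is flagged at first and then becomes the new normal, with no one editing a threshold by hand.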

2. Stop Measuring Individual Containers

Due to the dynamic nature of Kubernetes resources, and the assumption that replicas in a Deployment are symmetric, monitoring container resources individually has become very noisy.

Metrics change on a daily or hourly basis within a container’s short life cycle. For example, ReplicaSet metrics change with every deployment because a new ReplicaSet ID is generated. Typically, we want to learn about pattern changes across the entire set of containers. For that we use cAdvisor, which provides container-based metrics on CPU, memory and network usage.
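One way to picture "the entire set of containers" is to roll per-pod samples up to the workload that owns them, so the series survives a new ReplicaSet ID. The Python sketch below does this with a naming heuristic: it assumes Deployment pods are named `<deployment>-<replicaset-hash>-<suffix>`. The regular expression and function names are illustrative assumptions; reading the pod’s owner references from the API is the more reliable approach.

```python
import re
from collections import defaultdict
from statistics import mean

# Heuristic (assumption): <workload>-<5-10 char RS hash>-<5 char pod suffix>
POD_NAME = re.compile(r"^(?P<workload>.+)-[a-z0-9]{5,10}-[a-z0-9]{5}$")


def workload_of(pod_name):
    """Strip the ReplicaSet hash and pod suffix; fall back to the full name."""
    match = POD_NAME.match(pod_name)
    return match.group("workload") if match else pod_name


def aggregate_by_workload(samples):
    """samples: iterable of (pod_name, value), e.g. memory bytes per pod.

    Returns {workload: {"avg": ..., "max": ..., "pods": ...}}.
    """
    grouped = defaultdict(list)
    for pod_name, value in samples:
        grouped[workload_of(pod_name)].append(value)
    return {
        workload: {"avg": mean(values), "max": max(values), "pods": len(values)}
        for workload, values in grouped.items()
    }
```

Pods from two different ReplicaSets of the same Deployment land in the same bucket, so a rollout does not reset the learned pattern.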

Key metrics include:

From the above, it’s clear that a change in the average ‘max memory usage’ for a given container set indicates a need to fix the default limits and requests for memory (see example below).

The anomalies in the graph below show an increase in average memory usage by some set of containers in a specific cluster. In this case, the Etcd cluster:

In the next example, the system learns the CPU usage of a Kubernetes Deployment and understands what constitutes normal behavior. The baseline in this graph indicates the metric’s normal behavior, learned over time. Establishing this baseline ensures an alert isn’t fired every time CPU usage peaks. The system recognizes the dark blue peaks in the previous graph as less significant anomalies and those marked in orange as critical.

3. Get to the Heart of Potential Problems by Tracking “Status” or “Reason” Dimensions

Kube-State-Metrics is a nice add-on that keeps tabs on the API server and generates Kubernetes resource metrics. Typically, the ones that merit alerts relate to the Pod lifecycle and service state.

Take, for example, a container state metric that includes the property “reason”: “OOMKilled”. This error may occur occasionally due to hardware issues or rare events, and usually will not require immediate attention. But by following “status” and “reason” metrics over time, you can start to understand whether these “hiccups” are actually trends. If they are, you’ll likely need to adjust the allocated Limits and Requests to alleviate memory pressure.
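A small Python sketch of the "hiccup versus trend" distinction: bucket the events by time and ask in how many distinct buckets the reason showed up recently. The event tuple shape, the function names and the default values (hourly buckets, 24-hour lookback, three active hours) are illustrative assumptions, not recommended settings.

```python
from collections import Counter


def bucket_counts(events, reason, bucket_seconds):
    """events: iterable of (timestamp_seconds, reason). Count matches per bucket."""
    return Counter(
        int(ts // bucket_seconds) for ts, r in events if r == reason
    )


def is_recurring(events, reason, now, bucket_seconds=3600,
                 lookback_buckets=24, min_active_buckets=3):
    """True if `reason` occurred in enough distinct recent buckets to look like a trend."""
    counts = bucket_counts(events, reason, bucket_seconds)
    current = int(now // bucket_seconds)
    recent = range(current - lookback_buckets + 1, current + 1)
    active = sum(1 for bucket in recent if counts.get(bucket, 0) > 0)
    return active >= min_active_buckets
```

A single OOMKilled event stays quiet, while the same reason recurring across several separate hours is surfaced as something worth a look.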

As for issues related to services, the number of replicas per service usually depends on the scale of events (in an auto-scaled environment) and the roll-out strategy. It is important to track unavailable replicas and make sure that issues are not persistent.
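The persistence check can be sketched in a few lines of Python. The input is assumed to be a chronological series of unavailable-replica counts for one workload (for instance sampled from the `kube_deployment_status_replicas_unavailable` metric of kube-state-metrics); the function name and the `min_consecutive` default are illustrative.

```python
def persistently_unavailable(samples, min_consecutive=5):
    """samples: chronological unavailable-replica counts for one workload.

    True only if every one of the last `min_consecutive` samples is above zero,
    so brief dips during a rollout are ignored.
    """
    if len(samples) < min_consecutive:
        return False
    return all(count > 0 for count in samples[-min_consecutive:])
```

How many consecutive samples count as "persistent" depends on your scrape interval and roll-out strategy, so treat the default as a placeholder.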

4. Always Alert on High Disk Usage!

High disk usage (HDU) is by far the most common issue in any system. There is no magic workaround and no automatic healing for StatefulSet resources and statically attached volumes. An HDU alert always requires attention and usually indicates an application issue. Make sure to monitor ALL disk volumes, including the root file system. The Prometheus Node Exporter provides a nice metric for tracking devices:

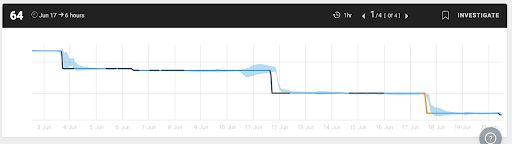
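As a hedged sketch of working with filesystem metrics, the Python below turns a size/available pair into a utilization percentage and fits a straight line to recent samples to estimate how long until a threshold is crossed. It assumes you have the Node Exporter gauges `node_filesystem_size_bytes` and `node_filesystem_avail_bytes` (the metric pictured above may differ); the function names and the default threshold are illustrative, and a linear fit is only a crude stand-in for real pattern detection.

```python
def utilization_percent(size_bytes, avail_bytes):
    """Used share of a filesystem, in percent."""
    return 100.0 * (size_bytes - avail_bytes) / size_bytes


def hours_until_threshold(samples, threshold_percent=80.0):
    """samples: chronological list of (hours, utilization_percent).

    Returns estimated hours from the last sample until the threshold is hit,
    0.0 if it is already exceeded, or None if usage is not growing.
    """
    n = len(samples)
    if n < 2:
        return None
    mean_t = sum(t for t, _ in samples) / n
    mean_u = sum(u for _, u in samples) / n
    var_t = sum((t - mean_t) ** 2 for t, _ in samples)
    if var_t == 0:
        return None
    # Ordinary least-squares slope and intercept.
    slope = sum((t - mean_t) * (u - mean_u) for t, u in samples) / var_t
    last_t, last_u = samples[-1]
    if last_u >= threshold_percent:
        return 0.0
    if slope <= 0:
        return None
    intercept = mean_u - slope * mean_t
    crossing_t = (threshold_percent - intercept) / slope
    return max(0.0, crossing_t - last_t)
```

For example, a volume growing steadily by two percentage points per hour from 50 percent would be estimated to reach 80 percent twelve hours after a reading of 56 percent.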

Usually, you will set an alert for 75-80 percent utilization. Nevertheless, identifying pattern changes earlier can reduce your headaches. The chart below shows real disk utilization over time and triggers anomaly alerts on meaningful drops.

5. Finally, We Cannot Ignore Kube-System

By far the most complex issues stem from the Kubernetes system itself. Issues typically occur due to DNS bottlenecks, network overload and, the sum of all fears, Etcd. It is critical to track degradation of the master nodes and identify issues before they happen, particularly in load average, memory and disk size. To make sure that we do not crash the clusters, we need to monitor specific kube-system patterns, in addition to the aggregated resource pattern detection mentioned in tip #2.

Most kube-system components expose metrics at a “/metrics” endpoint, which can be scraped directly and automatically. We recommend tracking application-level metrics for each kube-system component, such as:

Conclusion

If you are already managing Kubernetes, you are on the right track with your organization’s progress towards IT automation. Day to day, it enables you to self-remediate, scale on demand and automate the microservices lifecycle. And from the business perspective, Kubernetes can help you reduce operational costs and increase the efficiency of your engineering teams.

In this post, we recommend the adoption of automatic monitoring instead of manually managing alerts. Our vision at Anodot is that AI monitoring and anomaly detection will become a standard tool for managing large application clusters in complex environments. AI monitoring will allow you to run your application with minimum downtime, consequently helping safeguard your business from loss.