Customers running Kubernetes clusters on Amazon Elastic Kubernetes Service (Amazon EKS) are regularly looking for ways to better understand and control their costs.

While Amazon EKS simplifies Kubernetes operations, customers also want to understand the cost drivers for containerized applications running on EKS and the best practices for controlling those costs.

Anodot has collaborated with Amazon Web Services (AWS) to address these needs and share best practices on optimizing Amazon EKS costs.

You can read the full post on the AWS website.

Amazon EKS pricing model

The Amazon EKS pricing model has two major components: customers pay $0.10 per hour for each configured cluster, and they pay for the AWS resources (compute and storage) created within each cluster to run Kubernetes worker nodes.

While this pricing model appears straightforward, the reality is more complex, as the number of worker nodes may change depending on how the workload is scaled.
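To make the two components concrete, here is a minimal Python sketch that estimates one month of spend for a single cluster. The $0.10 per cluster-hour fee comes from the pricing model above; the 730-hour month is a common approximation, and the $0.20 per node-hour rate and the node counts are hypothetical placeholders, not real EC2 prices. Storage is ignored. Letting the node count vary by hour shows why scaling makes the bill harder to predict than the pricing model suggests.

```python
from typing import Sequence

CONTROL_PLANE_RATE = 0.10  # USD per cluster-hour (EKS cluster fee from the pricing model)
HOURS_PER_MONTH = 730      # approximation: 8,760 hours per year / 12 months


def monthly_cluster_cost(node_counts_per_hour: Sequence[int], node_hourly_rate: float) -> float:
    """Return the control-plane fee plus worker-node compute cost for one cluster.

    node_counts_per_hour[i] is the number of worker nodes running during hour i,
    so autoscaling shows up as a count that changes from hour to hour.
    node_hourly_rate is a hypothetical per-node price, not a real EC2 rate.
    """
    hours = len(node_counts_per_hour)
    control_plane = CONTROL_PLANE_RATE * hours
    compute = sum(node_counts_per_hour) * node_hourly_rate
    return control_plane + compute


# Hypothetical workloads: a steady 3 nodes vs. 6 nodes for 12 hours a day, 3 otherwise.
steady = monthly_cluster_cost([3] * HOURS_PER_MONTH, node_hourly_rate=0.20)
autoscaled = monthly_cluster_cost(
    [6 if hour % 24 < 12 else 3 for hour in range(HOURS_PER_MONTH)],
    node_hourly_rate=0.20,
)
print(f"steady: ${steady:,.2f}  autoscaled: ${autoscaled:,.2f}")
```

The control-plane fee is identical in both cases; only the worker-node term changes with the workload.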

Understanding the cost impact of each Kubernetes component

Kubernetes costs within Amazon EKS are driven by the following components:

- Clusters: When a customer deploys an Amazon EKS cluster, AWS creates, manages, and scales the control plane nodes. Features such as Managed Node Groups can be used to create the cluster's worker nodes.

- Nodes: Nodes are the Amazon EC2 instances that pods run on. A node's resources are divided into four parts: resources needed to run the operating system, resources needed to run the Kubernetes agents, resources reserved for the eviction threshold, and resources available for your pods to run containers.

- Pods: A pod is a group of one or more containers and is the smallest deployable unit you can create and manage in Kubernetes.
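The node breakdown above corresponds to what Kubernetes calls a node's allocatable resources: the capacity pods can request is the node's total capacity minus the operating-system reservation, the Kubernetes-agent reservation, and the eviction threshold. The Python sketch below applies that subtraction to memory only. Every number in it is a hypothetical example rather than an EKS default, and it ignores CPU and any per-node limit on the number of pods.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeMemory:
    """Memory budget of one worker node in MiB (all values are hypothetical)."""
    capacity: int            # total memory of the EC2 instance
    system_reserved: int     # set aside for the operating system
    kube_reserved: int       # set aside for Kubernetes agents (kubelet, container runtime)
    eviction_threshold: int  # hard eviction threshold, e.g. memory.available

    @property
    def allocatable(self) -> int:
        """Memory left for pods after the three reservations."""
        return self.capacity - self.system_reserved - self.kube_reserved - self.eviction_threshold


def pods_that_fit(node: NodeMemory, pod_request_mib: int) -> int:
    """How many identical pods fit by memory request alone (ignores CPU and pod-count limits)."""
    return node.allocatable // pod_request_mib


node = NodeMemory(capacity=16_384, system_reserved=100, kube_reserved=1_024, eviction_threshold=100)
print(node.allocatable, pods_that_fit(node, pod_request_mib=512))
```

Because the reservations come off the top, a node always offers pods somewhat less than the instance size you pay for.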

How Resource Requests Impact Kubernetes Costs

Pod resource requests are the primary driver of the number of EC2 instances needed to support a cluster. Customers specify resource requests and limits for vCPU and memory when they configure pods.

When a pod is deployed on a node, its requested resources are allocated to it and become unavailable to other pods on the same node. Once a node's resources are fully allocated, a cluster autoscaling tool spins up a new node to host additional pods. Incomplete or incorrect resource specifications can therefore drive up the cost of your cluster: for example, requests set well above what pods actually use keep capacity reserved but idle, so more nodes are provisioned than the workload needs.
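The link between requests and node count can be illustrated with a simple first-fit packing sketch in Python. It is a deliberately rough stand-in: the real kube-scheduler scores nodes differently and cluster autoscalers have their own logic, and the node size and pod requests are hypothetical. It assumes 20 pods that each actually use about 250 millicores and 512 MiB, and compares requests that match that usage with requests four times higher.

```python
from typing import List, Tuple

Resources = Tuple[int, int]  # (CPU in millicores, memory in MiB)


def nodes_needed(pod_requests: List[Resources], node_allocatable: Resources) -> int:
    """First-fit packing: put each pod on the first node with enough unallocated CPU
    and memory, and add a node when none fits (a rough stand-in for autoscaling)."""
    free: List[List[int]] = []  # remaining [cpu, memory] on each node so far
    for cpu, mem in pod_requests:
        if cpu > node_allocatable[0] or mem > node_allocatable[1]:
            raise ValueError(f"request {(cpu, mem)} does not fit on an empty node")
        for remaining in free:
            if remaining[0] >= cpu and remaining[1] >= mem:
                remaining[0] -= cpu
                remaining[1] -= mem
                break
        else:  # no existing node had room, so "scale up" by one node
            free.append([node_allocatable[0] - cpu, node_allocatable[1] - mem])
    return len(free)


NODE = (4_000, 16_000)  # hypothetical allocatable CPU (m) and memory (MiB) per node
right_sized = nodes_needed([(250, 512)] * 20, NODE)         # requests close to usage
over_requested = nodes_needed([(1_000, 2_048)] * 20, NODE)  # requests 4x higher
print(f"right-sized: {right_sized} nodes, over-requested: {over_requested} nodes")
```

With identical pods, first-fit fills one node before opening the next, so the node count is set by whichever resource runs out first (CPU in both cases here). The workload is the same; only the requests differ.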

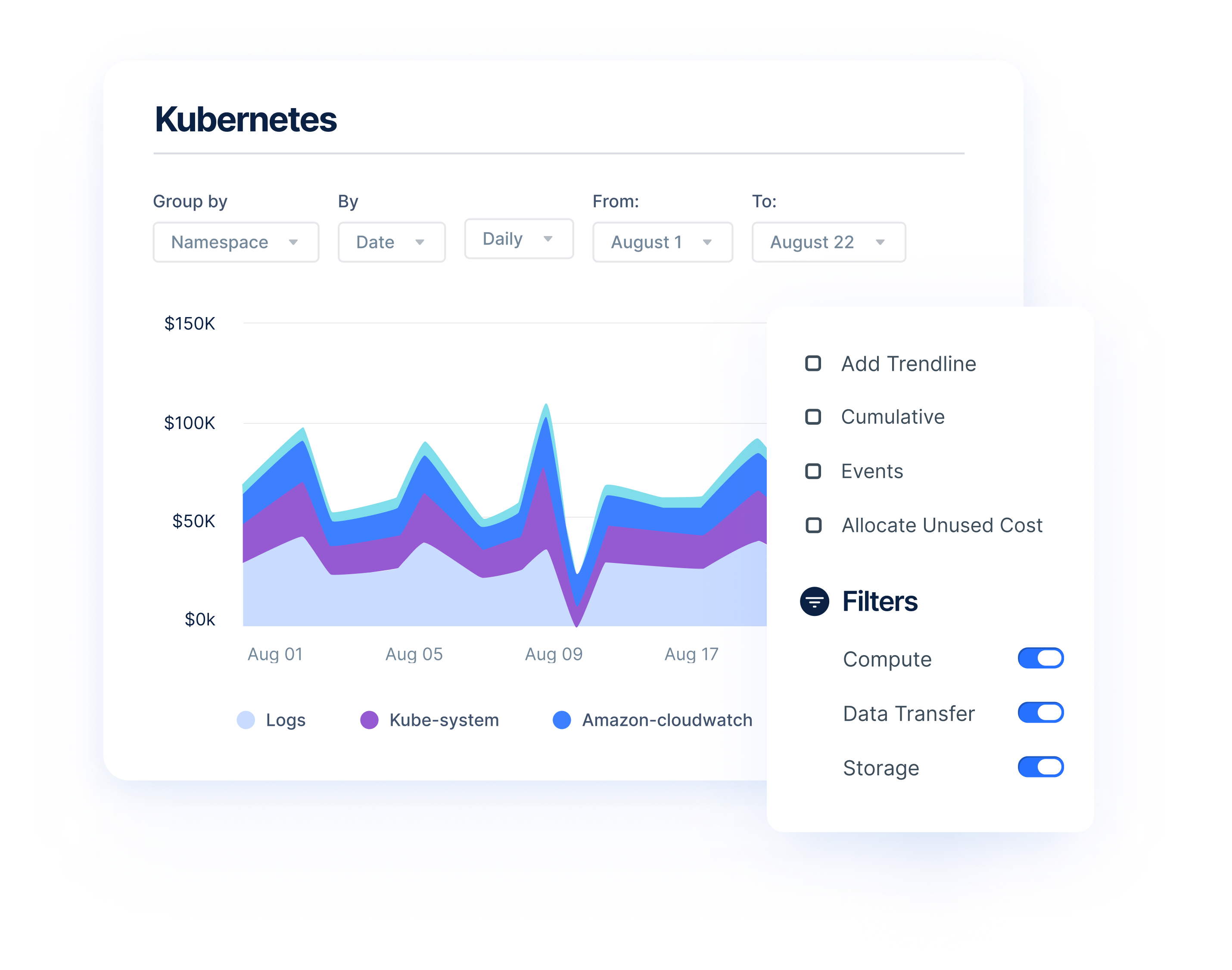

Tying Kubernetes Investment to Business Value with Anodot

Anodot's cloud cost management platform monitors cloud metrics together with revenue and business metrics, so users can understand the true unit economics of customers, applications, teams, and more. With Anodot, FinOps stakeholders from finance and DevOps can optimize their cloud investments to drive strategic initiatives.

Anodot correlates the metrics it collects with data from the AWS Cost and Usage Report, AWS pricing, and other sources. This correlation provides insight into pod resource utilization, node utilization, and waste. It also provides visibility into the cost of each application running in the cluster.
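As an illustration of how per-application cost and waste can be derived from pod requests, the Python sketch below splits one node's hourly cost across applications in proportion to their CPU requests and reports the unrequested remainder as idle. This is one common allocation approach, assumed here for illustration; it is not a description of how Anodot computes costs, and the application names, node price, and requests are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def allocate_node_cost(
    node_hourly_cost: float,
    node_allocatable_cpu_m: int,
    pods: List[Tuple[str, int]],  # (application name, CPU request in millicores)
) -> Dict[str, float]:
    """Split one node's hourly cost across applications in proportion to their CPU
    requests, and report the unrequested share under the "idle" key."""
    requested = sum(cpu_m for _, cpu_m in pods)
    if requested > node_allocatable_cpu_m:
        raise ValueError("pod requests exceed the node's allocatable CPU")
    cost_per_millicore = node_hourly_cost / node_allocatable_cpu_m
    costs: Dict[str, float] = defaultdict(float)
    for app, cpu_m in pods:
        costs[app] += cpu_m * cost_per_millicore
    costs["idle"] = (node_allocatable_cpu_m - requested) * cost_per_millicore
    return dict(costs)


# Hypothetical node price and workloads.
breakdown = allocate_node_cost(
    node_hourly_cost=0.20,
    node_allocatable_cpu_m=4_000,
    pods=[("checkout", 1_000), ("checkout", 1_000), ("search", 500)],
)
for app, cost in breakdown.items():
    print(f"{app}: ${cost:.4f}/hour")
```

Dividing by allocatable CPU rather than total capacity spreads the cost of the operating-system and Kubernetes reservations across the applications and the idle share. A fuller model would also weight memory and compare requests against measured usage.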

Start Reducing Cloud Costs Today!

Connect with one of our cloud cost management specialists to learn how Anodot can help your organization control costs, optimize resources and reduce cloud waste.