AWS DynamoDB is a fully managed NoSQL database provided by Amazon Web Services. It is a fast and flexible database service which has been built for scale.

What are the features of DynamoDB?

Some features of DynamoDB are:

Flexible Schema: DynamoDB is a NoSQL database with a flexible schema that supports both document and key-value data models. Apart from the primary key, each item (row) can have any number of attributes (columns), and that set can change at any point in time.

Scalability: Amazon DynamoDB is highly scalable. It scales horizontally and, according to AWS, can handle more than 10 trillion requests per day.

Performance: DynamoDB provides high throughput and low latency, with single-digit millisecond response times for database operations. According to AWS, it can support peaks of more than 20 million requests per second.

Security: DynamoDB encrypts the data at rest and supports encryption in transit. Its encryption capabilities along with the IAM capabilities of AWS provide state-of-the-art security.

Availability: AWS DynamoDB is backed by an availability Service Level Agreement: 99.999% for multi-region Global Tables and 99.99% for a single-region table.

Backup and Restoration: DynamoDB provides on-demand backups and point-in-time recovery, so a table can be restored to an earlier state (up to 35 days back) after accidental writes or deletes.

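Cost Optimization: Amazon DynamoDB is a fully managed database that scales up and down automatically depending on your requirements, so you pay for capacity close to what you actually use instead of provisioning for peak load around the clock.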

Integration with AWS Ecosystem: AWS DynamoDB provides seamless integration with other AWS services that can be used for data analytics, extracting insights and monitoring the system.

DynamoDB — Best Practices to maximize scale and performance

Provisioned Capacity: When you expect a huge surge of traffic, such as during a Black Friday sale, Prime Day or the Super Bowl, raise the floor (minimum) of your auto scaling provisioned capacity beforehand to your expected peak. You can drop it back down to the normal level when the high-traffic event is over. Auto scaling reacts only after the load has arrived, so a pre-raised floor means the capacity is already in place, and burst capacity and adaptive capacity remain available as a buffer. Everything then runs smoothly even with a massive surge in traffic.
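
Raising and lowering the floor can be scripted. The sketch below only builds the arguments for Application Auto Scaling's `register_scalable_target` call. The table name `orders` and the capacity numbers are hypothetical, and the actual API call is left as a comment because it needs boto3 and AWS credentials.

```python
from typing import Any, Dict


def scaling_target_params(
    table_name: str,
    min_units: int,
    max_units: int,
    dimension: str = "dynamodb:table:WriteCapacityUnits",
) -> Dict[str, Any]:
    """Build keyword arguments for register_scalable_target."""
    if min_units > max_units:
        raise ValueError("min_units must not exceed max_units")
    return {
        "ServiceNamespace": "dynamodb",
        "ResourceId": f"table/{table_name}",
        "ScalableDimension": dimension,
        "MinCapacity": min_units,  # the auto scaling floor
        "MaxCapacity": max_units,
    }


# Hypothetical numbers: a normal floor of 500 WCU and an expected peak of 8,000 WCU.
before_event = scaling_target_params("orders", min_units=8000, max_units=12000)
after_event = scaling_target_params("orders", min_units=500, max_units=12000)

# To apply (requires boto3 and AWS credentials):
#   import boto3
#   client = boto3.client("application-autoscaling")
#   client.register_scalable_target(**before_event)
```

Run the first call shortly before the event and the second once traffic has returned to normal. Read capacity uses the `dynamodb:table:ReadCapacityUnits` dimension in the same way.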

Availability: If you want five nines (99.999%) availability in DynamoDB, enable Global Tables, which replicate your data across multiple regions. In this configuration, 99.999% availability is an SLA commitment from AWS. A single-region DynamoDB setup is covered only at four nines (99.99%).

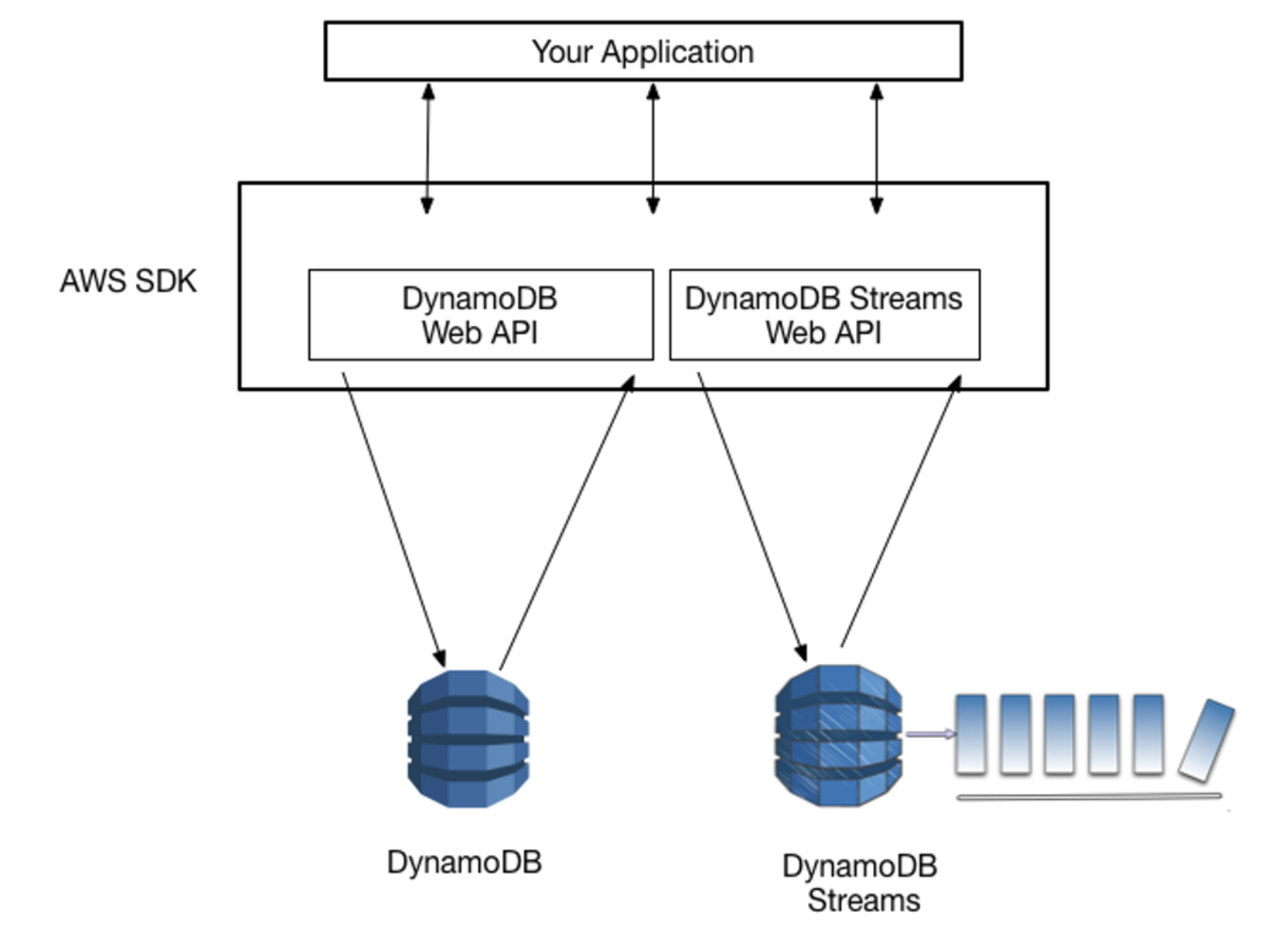

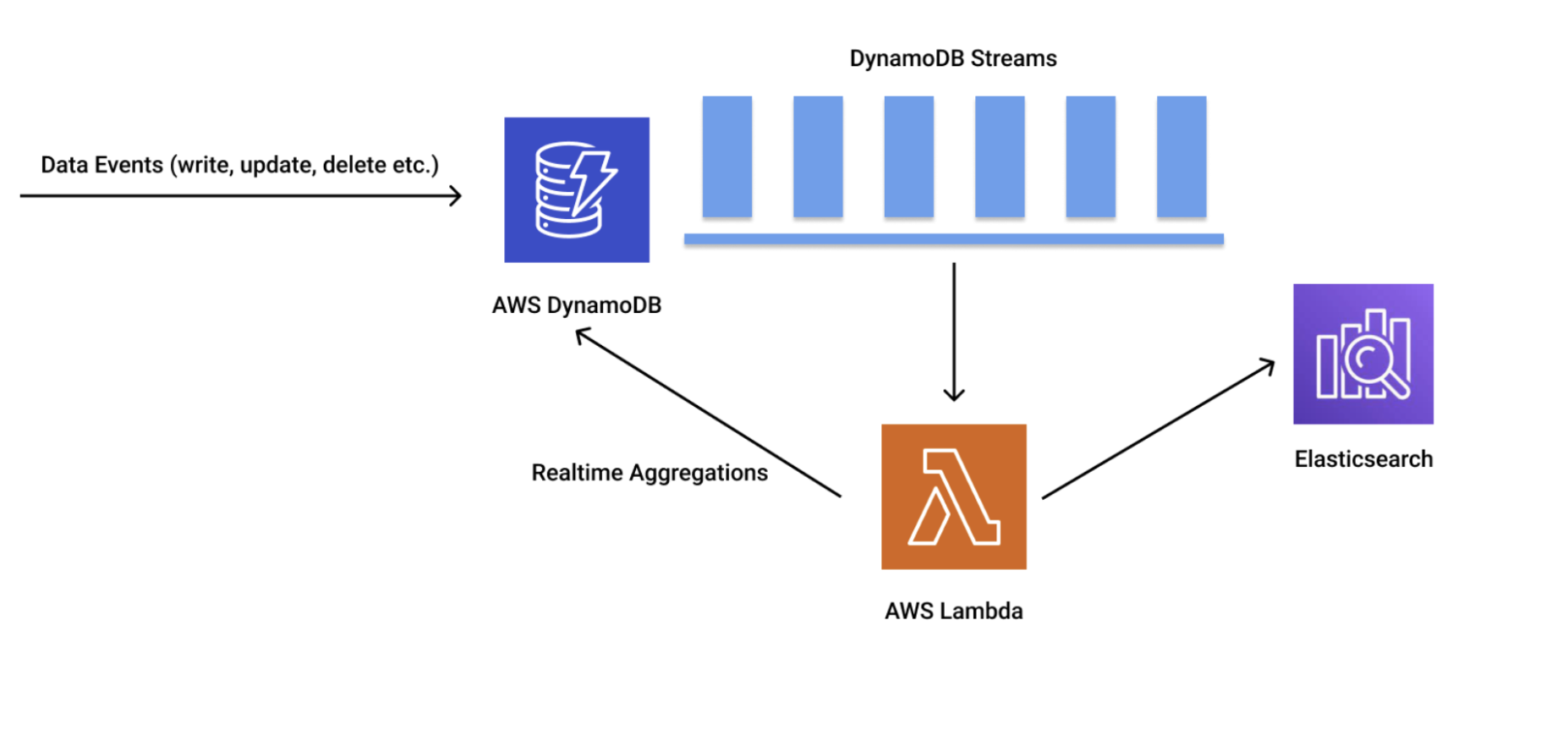

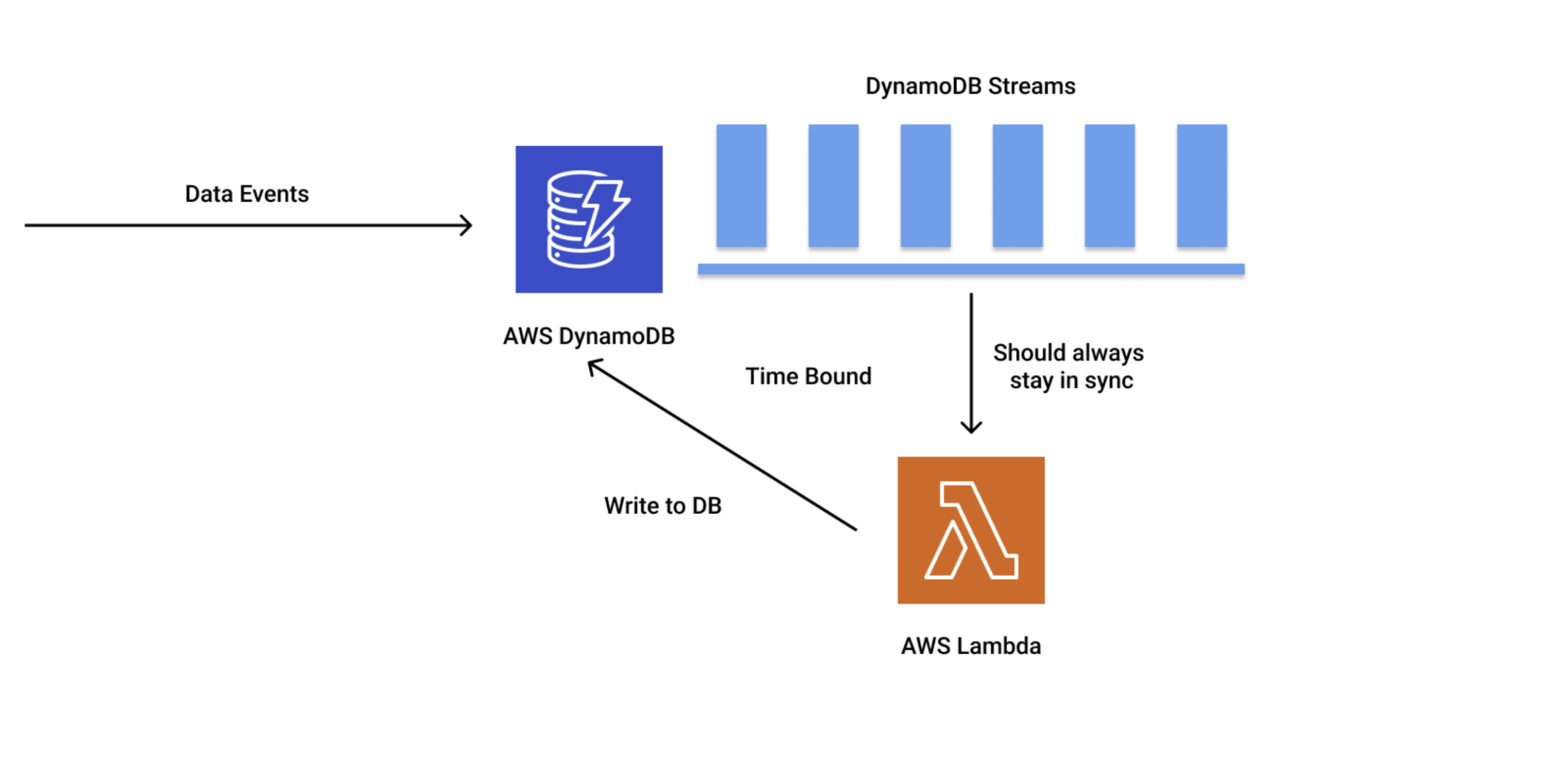

Handling Aggregation Queries: Aggregation queries are complicated to deal with in a NoSQL scenario, and DynamoDB has no built-in aggregate functions. DynamoDB Streams can be used together with Lambda functions to compute this data in advance and write it to an item in a table. Each data change event (insert, update or delete) lands on the DynamoDB stream, which in turn triggers a Lambda function that updates the result. This saves resources at read time, and the user is able to retrieve the aggregate instantly.
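
As a rough illustration, the function below works out how much a running total should change for one batch of stream records. It is a sketch under assumptions: the key attribute `customer_id`, the numeric attribute `amount` and the aggregate attribute `total_amount` are hypothetical, and the stream is assumed to be configured with the `NEW_AND_OLD_IMAGES` view type.

```python
from collections import defaultdict
from decimal import Decimal
from typing import Dict


def amount_of(image: dict) -> Decimal:
    """Read the hypothetical numeric 'amount' attribute from a stream image."""
    return Decimal(image.get("amount", {}).get("N", "0"))


def compute_deltas(event: dict) -> Dict[str, Decimal]:
    """Return the net change in total amount per customer for one stream batch."""
    deltas: Dict[str, Decimal] = defaultdict(Decimal)
    for record in event.get("Records", []):
        data = record["dynamodb"]
        customer = data["Keys"]["customer_id"]["S"]
        # NewImage is absent on REMOVE and OldImage is absent on INSERT,
        # so a missing image counts as zero.
        new = amount_of(data.get("NewImage", {}))
        old = amount_of(data.get("OldImage", {}))
        deltas[customer] += new - old
    return dict(deltas)


# Inside the Lambda handler, each delta would then be applied to the
# aggregate item, for example with an UpdateItem call using the update
# expression "ADD total_amount :delta" (requires boto3 and AWS credentials).
```

Lambda can deliver the same batch more than once after a failure, so a production version would also need to guard against counting a record twice.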

Serverless Computing Lambda Execution Timing: DynamoDB works along with AWS’s native Lambda functions to provide a serverless infrastructure. However, you need to make sure that the iterator age of the Lambda function (the age of the last stream record it processed) stays relatively low and manageable. If it increases, it should do so in bursts and not as a steady climb. If the Lambda function is too heavy and the work being done inside it is very time-consuming, the function falls behind your DynamoDB stream. Stream records are retained for only 24 hours, so a function that falls far enough behind loses the oldest records before it can process them.
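
One way to catch a steadily growing iterator age is a CloudWatch alarm on Lambda's `IteratorAge` metric, which is reported in milliseconds. The sketch below builds the arguments for CloudWatch's `put_metric_alarm`. The function name, the one-minute threshold and the five-period window are assumptions to adjust for your workload.

```python
from typing import Any, Dict


def iterator_age_alarm_params(
    function_name: str,
    threshold_ms: int = 60_000,
    periods: int = 5,
) -> Dict[str, Any]:
    """Build keyword arguments for CloudWatch's put_metric_alarm."""
    return {
        "AlarmName": f"{function_name}-iterator-age",
        "Namespace": "AWS/Lambda",
        "MetricName": "IteratorAge",
        "Dimensions": [{"Name": "FunctionName", "Value": function_name}],
        "Statistic": "Maximum",
        "Period": 60,  # seconds per evaluation period
        # Requiring several consecutive breaching periods ignores short
        # bursts and fires only on a sustained backlog.
        "EvaluationPeriods": periods,
        "Threshold": float(threshold_ms),
        "ComparisonOperator": "GreaterThanThreshold",
    }


params = iterator_age_alarm_params("order-aggregator")

# To create the alarm (requires boto3 and AWS credentials):
#   import boto3
#   boto3.client("cloudwatch").put_metric_alarm(**params)
```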

Global Secondary Indexes: GSIs can be used for cost optimization in scenarios where an application needs to perform many queries using a variety of different attributes as query criteria. Queries can be issued against these secondary indexes instead of running a full table scan, which reads (and bills for) every item in the table. A query against a GSI reads only the matching items, which can reduce cost drastically.
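
To make this concrete, here is a minimal sketch that builds the arguments for a `Query` against a GSI using the low-level DynamoDB client. The table name `orders`, the index name `status-index` and the attribute `status` are hypothetical, and the key is assumed to be a string.

```python
from typing import Any, Dict


def gsi_query_params(
    table_name: str,
    index_name: str,
    key_attribute: str,
    key_value: str,
) -> Dict[str, Any]:
    """Build keyword arguments for a Query on a global secondary index."""
    return {
        "TableName": table_name,
        "IndexName": index_name,
        # A name placeholder avoids clashes with DynamoDB reserved words.
        "KeyConditionExpression": "#k = :v",
        "ExpressionAttributeNames": {"#k": key_attribute},
        "ExpressionAttributeValues": {":v": {"S": key_value}},
    }


params = gsi_query_params("orders", "status-index", "status", "SHIPPED")

# To run the query (requires boto3 and AWS credentials):
#   import boto3
#   response = boto3.client("dynamodb").query(**params)
```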

Provisioned throughput considerations for GSIs: In order to avoid potential throttling, the provisioned write capacity for a GSI should be equal to or greater than the write capacity of the base table. This is due to the fact that updates in the database would need to be written in both the base table and the Global Secondary Index.

Provisioned Capacity with Auto Scaling: Generally, you should use provisioned capacity when you have the bandwidth to understand your traffic patterns and are comfortable changing the capacity via the API. Auto Scaling should only be used in the following scenarios:

- When the traffic is predictable and steady

- When you can slowly ramp up batch/bulk loading jobs

- When pre-defined jobs can be scheduled and capacity can be provisioned for them in advance.

Using DynamoDB Accelerator (DAX): Amazon DAX is a fully managed, highly available in-memory cache for Amazon DynamoDB which, according to AWS, delivers up to a 10x performance improvement. DAX should be used in scenarios where you need low-latency reads. For instance, DAX can take read response times from milliseconds to microseconds, even in a system that is processing millions of requests per second.

Increasing Throughput: Implement read and write sharding for situations where you need more throughput than a single partition key can sustain. Sharding spreads your data across multiple partitions, typically by adding a suffix to a heavily used partition key, so that the workload is distributed across them. It is a common and effective technique in database systems.
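
A minimal sketch of write sharding, assuming a shard count of 10 and a `#` separator between the base key and the suffix (both are illustrative choices, not DynamoDB requirements):

```python
import hashlib
import random
from typing import List

SHARD_COUNT = 10  # assumed value; size it to your write volume


def random_shard_key(base_key: str, shard_count: int = SHARD_COUNT) -> str:
    """Spread writes evenly by appending a random suffix."""
    return f"{base_key}#{random.randrange(shard_count)}"


def calculated_shard_key(
    base_key: str, item_id: str, shard_count: int = SHARD_COUNT
) -> str:
    """Derive the suffix from the item, so a reader can recompute it."""
    digest = hashlib.sha256(item_id.encode("utf-8")).digest()
    shard = int.from_bytes(digest[:4], "big") % shard_count
    return f"{base_key}#{shard}"


def all_shard_keys(base_key: str, shard_count: int = SHARD_COUNT) -> List[str]:
    """Every partition key a reader must query to see the full data set."""
    return [f"{base_key}#{n}" for n in range(shard_count)]
```

Random suffixes give the most even spread, but reading everything back means querying every key from `all_shard_keys` and merging the results. A calculated suffix lets you go straight to the right shard when you already know the item.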

Batching: In scenarios where it is possible to read or write multiple items at once, consider using batch operations. They significantly reduce the number of requests made to the database and the network round trips that go with them, which improves cost and performance. DynamoDB provides the BatchWriteItem (up to 25 put or delete requests per call) and BatchGetItem (up to 100 items per call) operations for implementing this strategy.
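
Because of the per-call limits, a large set of items has to be split into chunks first. The sketch below builds one `BatchWriteItem` request body per chunk of at most 25 items. The table name and item shape are hypothetical, and items are assumed to already be in DynamoDB's attribute-value format.

```python
from typing import Any, Dict, Iterator, List, Sequence

MAX_BATCH_WRITE = 25  # BatchWriteItem limit per call


def chunked(items: Sequence[Any], size: int) -> Iterator[List[Any]]:
    """Yield consecutive slices of at most `size` items."""
    if size < 1:
        raise ValueError("size must be at least 1")
    for start in range(0, len(items), size):
        yield list(items[start:start + size])


def batch_write_requests(
    table_name: str, items: Sequence[Dict[str, Any]]
) -> List[Dict[str, Any]]:
    """Build one BatchWriteItem keyword-argument dict per chunk of items."""
    return [
        {"RequestItems": {table_name: [{"PutRequest": {"Item": item}} for item in chunk]}}
        for chunk in chunked(items, MAX_BATCH_WRITE)
    ]


# To send the requests (requires boto3 and AWS credentials):
#   import boto3
#   client = boto3.client("dynamodb")
#   for request in batch_write_requests("orders", items):
#       response = client.batch_write_item(**request)
#       # response["UnprocessedItems"] lists writes that were not applied
#       # and must be retried, ideally with backoff.
```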

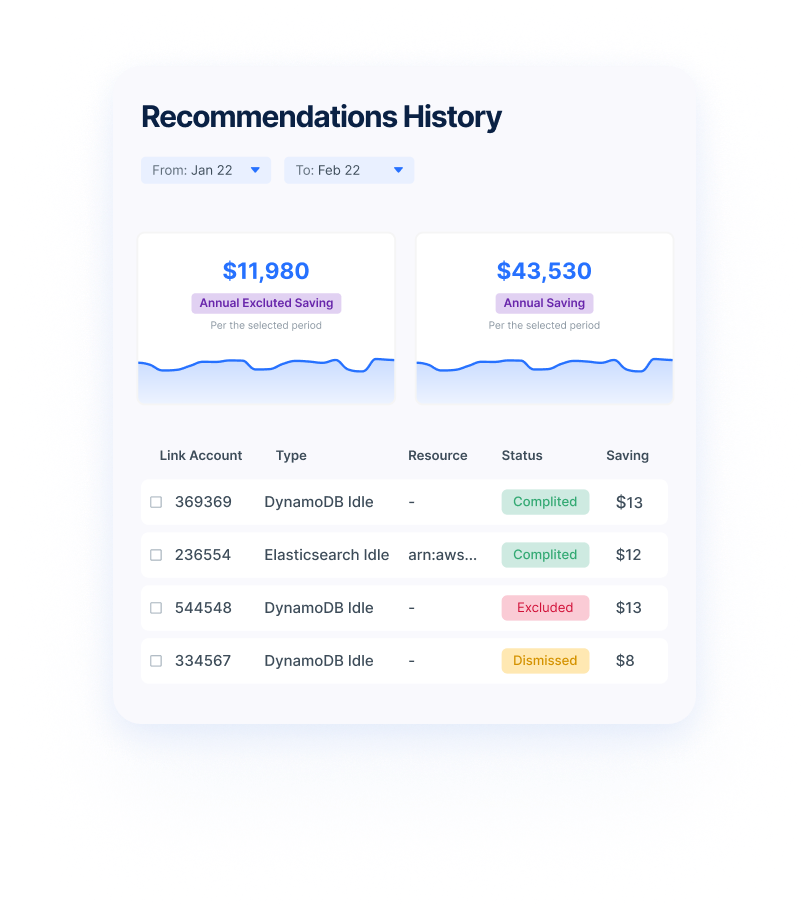

Monitoring and Optimization: It is good practice to monitor and analyze your DynamoDB metrics. Doing so helps you understand the performance of the system, identify bottlenecks and optimize them. DynamoDB integrates with Amazon CloudWatch, the monitoring and management service for AWS resources, so you can periodically review your queries and move them to more efficient access patterns. Monitoring the cost of DynamoDB is just as important, as it can directly impact your organization’s cloud budget. It is how you ensure that you stay within your budget constraints and that cost spikes are kept in check.

Anodot’s Cloud Cost Management capabilities can help you to effectively monitor the cost of your DynamoDB tables. Anodot gives you full visibility into your cloud environment, which helps you visualize, optimize and monitor your DynamoDB usage. The tools provided by Anodot help ensure that your DynamoDB tables are not sitting idle and that your allocated capacity matches your actual usage.

Periodic Schema Optimization: The database schema should be reviewed and optimized periodically. The access patterns an application requires change over time, and to keep the system efficient you should adjust your schema to match them. This includes restructuring database tables, modifying indexes and so on.

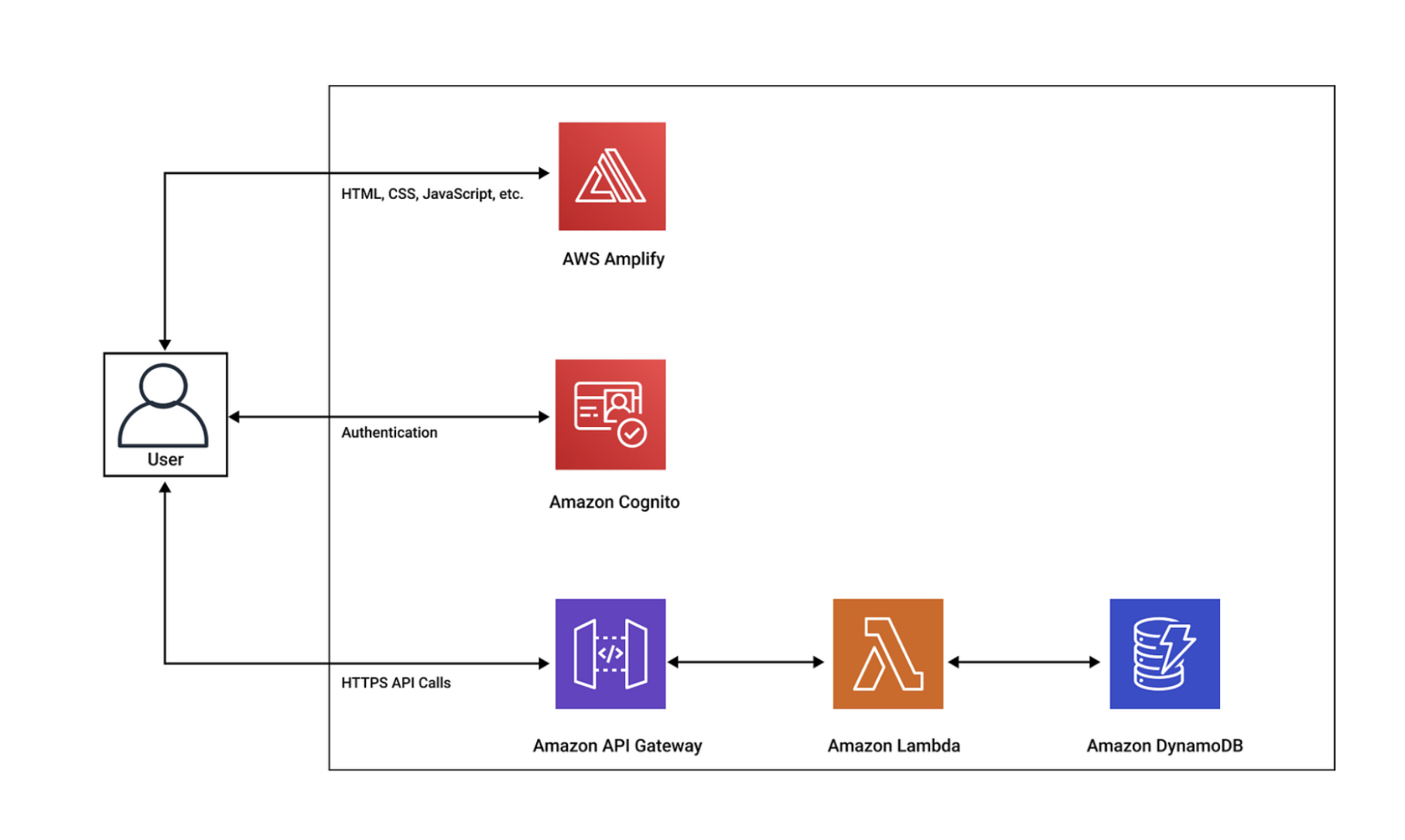

System diagram of DynamoDB being used in a serverless setup with AWS Lambda, Amplify and Cognito.
