Kubernetes, or K8s, is an open-source, production-grade container orchestration system for automating the deployment, scaling, and management of containerized applications. A container is a lightweight, standalone, executable software package that contains everything needed to run an application: the code, runtime, libraries, system tools, and default settings. Managing, deploying, and scaling containers by hand quickly becomes complicated in real-world environments with many services and machines. Kubernetes simplifies and streamlines this process.

Key components of K8s

The core components of Kubernetes are:

Node: a node is a worker machine in a Kubernetes cluster, for example a virtual machine instance or a physical server.

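Pod: a pod is the smallest deployable unit in Kubernetes. It wraps one or more containers that share networking and storage, and typically represents a single instance of a running application.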

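Deployment: a deployment is a controller that manages a set of identical pod replicas. You declare the desired state, and the deployment creates, updates, and replaces pods to match it.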

Service: a service is an abstraction that exposes a set of pods (for example, the pods of a deployment) as a single network service with a stable address.

Volume: a volume is a directory containing data that the containers in a pod can access.
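To make the relationship between services and pods more concrete, here is a minimal Python sketch of label-based selection, which is the usual way a service finds the pods it exposes. It is an illustration only, not the Kubernetes implementation: the `Pod` class, the `select_pods` helper, and the sample labels are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Pod:
    """Hypothetical, simplified stand-in for a pod: just a name and labels."""
    name: str
    labels: dict[str, str] = field(default_factory=dict)


def select_pods(selector: dict[str, str], pods: list[Pod]) -> list[Pod]:
    """Return the pods whose labels contain every key/value pair in the selector."""
    return [
        pod for pod in pods
        if all(pod.labels.get(key) == value for key, value in selector.items())
    ]


# Sample data (made up for illustration).
pods = [
    Pod("web-1", {"app": "web", "tier": "frontend"}),
    Pod("web-2", {"app": "web", "tier": "frontend"}),
    Pod("db-1", {"app": "db"}),
]

# A service with the selector app=web would route traffic to these pods.
backends = select_pods({"app": "web"}, pods)
print([pod.name for pod in backends])
```

Because the match is on labels rather than pod names, pods can be replaced or scaled without changing the service definition.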

Features of K8s

Some features of Kubernetes are:

Horizontal Scaling: With Kubernetes, you can scale your application up or down manually as requirements change, or automatically based on CPU usage.

Automated Rollouts and Rollbacks: Kubernetes rolls out changes to your application progressively while monitoring its health, so it never kills all your instances at the same time. If something goes wrong, Kubernetes rolls back the change for you.
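The idea of never taking down every instance at once can be sketched with a small simulation. The function below is a simplified, hypothetical model, not Kubernetes code: it borrows the name `max_unavailable` from the Deployment rolling update strategy, and it ignores surge capacity and readiness checks.

```python
def rolling_update(replicas: int, max_unavailable: int = 1) -> list[tuple[int, int]]:
    """Simulate replacing old pods with new ones in small batches.

    Returns the (old_running, new_running) counts after every step.
    """
    if max_unavailable < 1:
        raise ValueError("max_unavailable must be at least 1")

    old, new = replicas, 0
    history = [(old, new)]
    while old > 0:
        batch = min(max_unavailable, old)
        old -= batch              # stop one batch of old pods
        history.append((old, new))
        new += batch              # start their replacements
        history.append((old, new))
    return history


steps = rolling_update(replicas=4, max_unavailable=1)
lowest = min(old + new for old, new in steps)
print(f"final state: {steps[-1]}, fewest pods running at any step: {lowest}")
```

In this model the number of running pods never drops below `replicas - max_unavailable`, which is what keeps the application serving traffic during the rollout.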

Self-Healing: Kubernetes automatically restarts containers that fail and replaces and reschedules containers when their node dies. It also kills containers that do not respond to user-defined health checks.

Load Balancing and Service Discovery: Kubernetes gives pods their own IP addresses and a single DNS name for a set of pods, and it can load-balance traffic across them.

Storage Orchestration: Kubernetes can automatically mount your desired storage system. It can be local storage, iSCSI or NFS network storage systems, or storage systems provided by popular cloud providers.

Dual-Stack: Kubernetes supports dual-stack networking. It can allocate both IPv4 and IPv6 addresses to pods and services.

Batch Execution and Continuous Integration: K8s can manage batch and CI workloads, replacing containers that fail.

Secret and Configuration Management: Kubernetes can deploy and update configuration and secrets without rebuilding the container image, and without exposing secrets in your stack configuration.

Extensibility: Kubernetes allows custom functionalities to be added to the system without modifying its core binaries.

Monitoring and Logging: K8s has integration capabilities with major logging and monitoring services which provide useful insights into the system.

Kubernetes: Visibility and Optimization

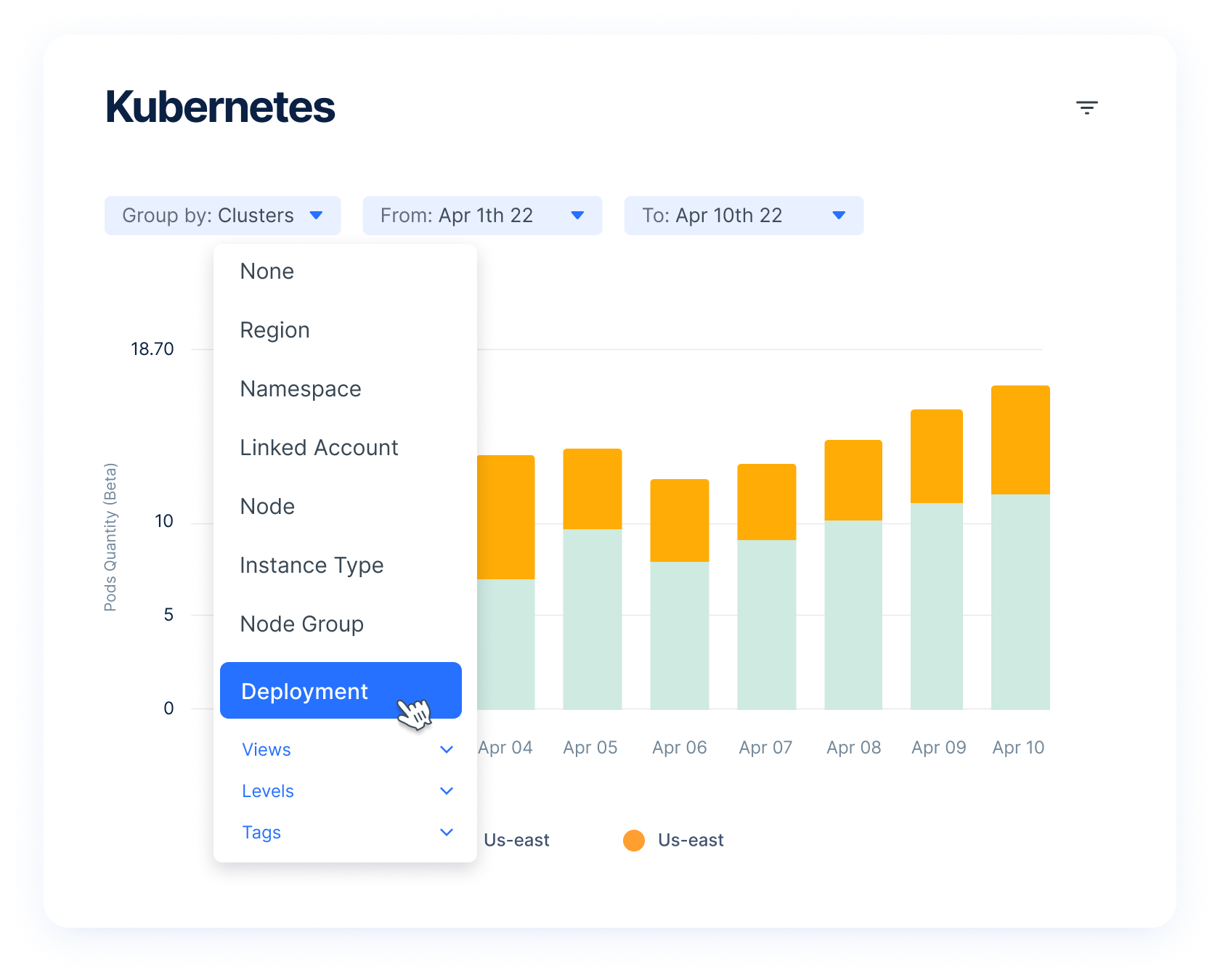

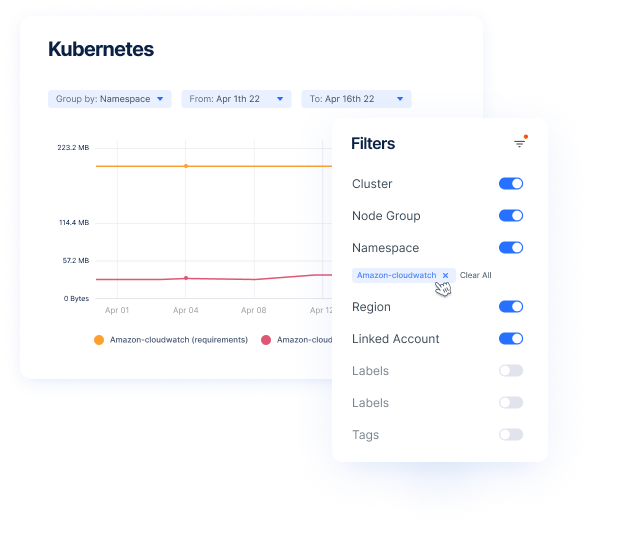

Per-pod visibility in a Kubernetes cluster is important for any organization that wants to debug pod-level issues, optimize deployments, monitor performance, and improve resource utilization. Using Anodot, FinOps teams can fine-tune Kubernetes resource allocation. This includes allocating the correct amount of resources per cluster, namespace, node, pod, and container. Anodot’s solutions provide comprehensive K8s visibility that you can use to continuously optimize your Kubernetes environment and hence your deployed applications.

Per-pod visibility and optimization can be achieved in the following ways:

CPU and Memory Usage: Analyze clusters and nodes to compare the CPU and memory each pod actually uses with what it has been allocated. Pods that consistently use far less than their allocation are overprovisioned, and their resources can be reduced accordingly.
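As a rough illustration of this kind of analysis, the Python sketch below flags pods whose observed usage is well under their requested resources. Everything in it is an assumption made for the example: the `PodUsage` record, the 50% threshold, and the sample numbers. In practice the usage figures would come from your metrics pipeline.

```python
from dataclasses import dataclass


@dataclass
class PodUsage:
    """Hypothetical record of requested versus observed resources for one pod."""
    name: str
    cpu_request_m: int     # requested CPU, in millicores
    cpu_used_m: int        # observed CPU usage, in millicores
    mem_request_mib: int   # requested memory, in MiB
    mem_used_mib: int      # observed memory usage, in MiB


def overprovisioned(pods: list[PodUsage], threshold: float = 0.5) -> list[tuple[str, float, float]]:
    """Return (name, cpu_ratio, mem_ratio) for pods using less than
    `threshold` of their requested CPU or memory."""
    flagged = []
    for pod in pods:
        if pod.cpu_request_m <= 0 or pod.mem_request_mib <= 0:
            continue  # no request set, so there is no ratio to compute
        cpu_ratio = pod.cpu_used_m / pod.cpu_request_m
        mem_ratio = pod.mem_used_mib / pod.mem_request_mib
        if cpu_ratio < threshold or mem_ratio < threshold:
            flagged.append((pod.name, round(cpu_ratio, 2), round(mem_ratio, 2)))
    return flagged


# Sample data (made up for illustration).
usage = [
    PodUsage("api", cpu_request_m=1000, cpu_used_m=150, mem_request_mib=2048, mem_used_mib=1800),
    PodUsage("worker", cpu_request_m=500, cpu_used_m=420, mem_request_mib=1024, mem_used_mib=900),
]

for name, cpu_ratio, mem_ratio in overprovisioned(usage):
    print(f"{name}: using {cpu_ratio:.0%} of requested CPU, {mem_ratio:.0%} of requested memory")
```

A single snapshot can mislead, so a real analysis would look at usage over a representative period, including peaks, before lowering any allocation.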

Audit Logs: Kubernetes audit logs record a chronological sequence of the requests made to the cluster, which makes them highly useful for debugging issues and analyzing system activity.

Monitoring and Analysis: Prometheus is a widely used monitoring tool for Kubernetes. It collects metrics from Kubernetes pods so that you can derive meaningful insights from them. Prometheus is often used together with Grafana to visualize the collected metrics.

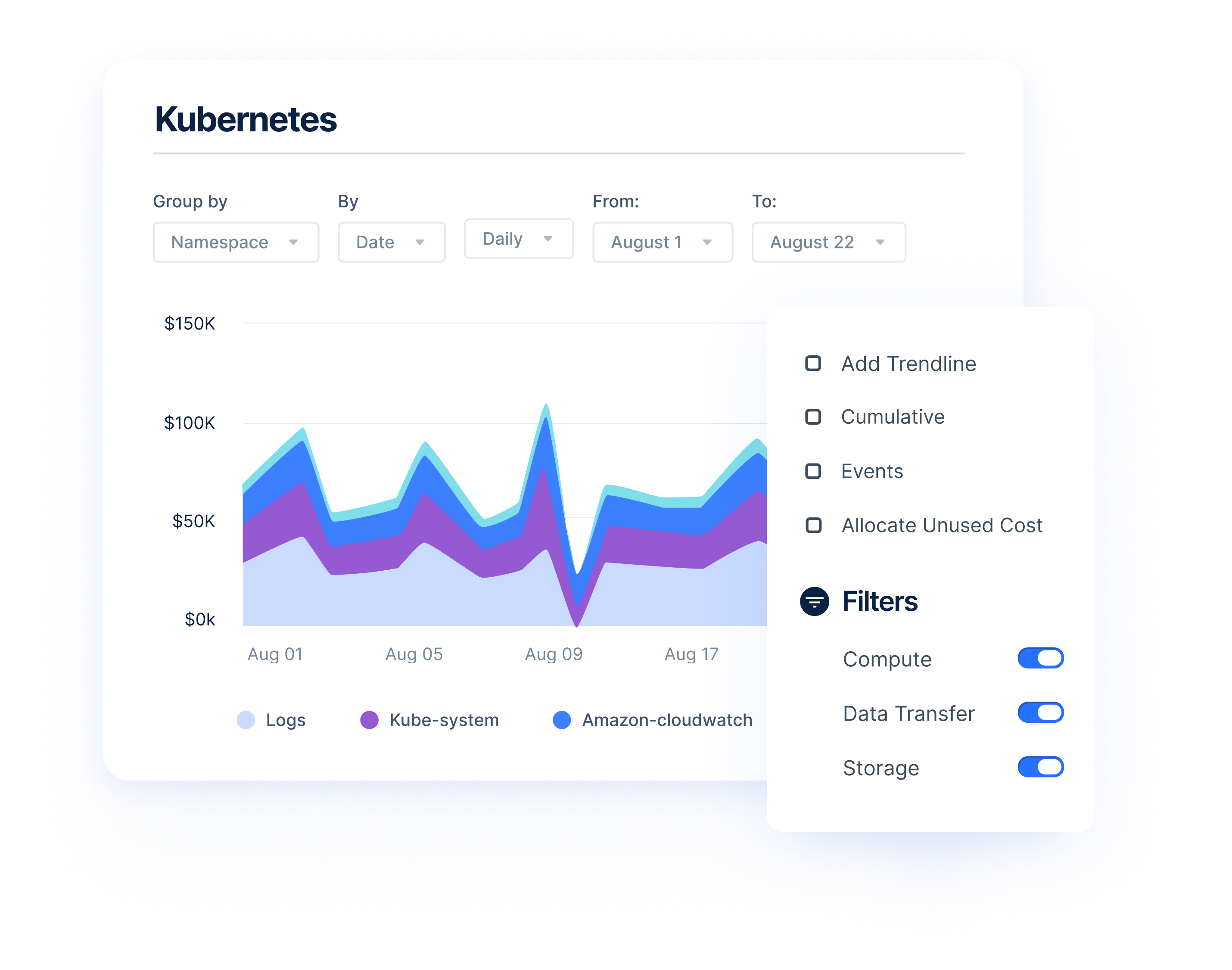

Accurate Cost Allocation: Kubernetes clusters are shared: several teams can run applications on the same cluster simultaneously. As a result, there is no directly billed cost for a specific container. Breaking costs down by compute, storage, data transfer, shared cluster costs, and waste gives visibility into the structure of spend and paves the path to optimization.
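One simple way to approximate a per-workload cost is to split each node's price in proportion to what every pod requests and to report the unrequested remainder as idle capacity. The Python sketch below shows that arithmetic for CPU only. It is one possible allocation model under stated assumptions, not how Anodot or any specific tool computes cost; the function name, prices, and pod names are hypothetical.

```python
def allocate_node_cost(
    node_hourly_cost: float,
    node_cpu_m: int,
    pod_cpu_requests_m: dict[str, int],
) -> tuple[dict[str, float], float]:
    """Split a node's hourly cost across pods in proportion to CPU requests.

    Returns (cost_per_pod, idle_cost), where idle_cost is the share of the
    node that no pod has requested.
    """
    total_requested = sum(pod_cpu_requests_m.values())
    if node_cpu_m <= 0 or total_requested > node_cpu_m:
        raise ValueError("requests must fit within a positive node capacity")

    cost_per_millicore = node_hourly_cost / node_cpu_m
    cost_per_pod = {
        name: request * cost_per_millicore
        for name, request in pod_cpu_requests_m.items()
    }
    idle_cost = node_hourly_cost - sum(cost_per_pod.values())
    return cost_per_pod, idle_cost


# Sample data (made up): a 4-core node at $0.40/hour shared by two teams.
costs, idle = allocate_node_cost(
    node_hourly_cost=0.40,
    node_cpu_m=4000,
    pod_cpu_requests_m={"team-a/api": 1500, "team-b/worker": 1000},
)
for name, cost in costs.items():
    print(f"{name}: ${cost:.3f}/hour")
print(f"idle: ${idle:.3f}/hour")
```

A fuller model would also weigh memory, storage, and data transfer, and would decide how shared and idle costs are distributed back to teams.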

Resource Requests and Limits: It is good practice to set appropriate CPU and memory requests and limits for your Kubernetes pods. Requests tell the Kubernetes scheduler how much of each resource a pod needs, which helps it make better placement decisions. Limits cap how much a container can consume. Together, they ensure that pods use only the resources they require and help avoid issues such as resource contention.
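To show why requests matter for scheduling, here is a minimal Python sketch of the fit check a scheduler performs: a pod can be placed on a node only if its requests, added to those of the pods already there, stay within the node's allocatable resources. This is a simplification of the real scheduler, which applies many more filters and scoring rules; the function name, resource keys, and numbers are hypothetical.

```python
Resources = dict[str, int]  # for example {"cpu_m": 500, "mem_mib": 256}


def fits_on_node(
    allocatable: Resources,
    running_requests: list[Resources],
    pod_requests: Resources,
) -> bool:
    """Return True if the pod's requests fit in the node's remaining capacity."""
    for resource, capacity in allocatable.items():
        already_requested = sum(r.get(resource, 0) for r in running_requests)
        if already_requested + pod_requests.get(resource, 0) > capacity:
            return False
    return True


# Sample data (made up): a node with 2 cores and 4 GiB allocatable.
node = {"cpu_m": 2000, "mem_mib": 4096}
running = [
    {"cpu_m": 1000, "mem_mib": 1024},
    {"cpu_m": 500, "mem_mib": 2048},
]

print(fits_on_node(node, running, {"cpu_m": 400, "mem_mib": 512}))
print(fits_on_node(node, running, {"cpu_m": 600, "mem_mib": 512}))
```

Note that the check uses requests, not actual usage. Inflated requests therefore block other pods from being scheduled even when the node is mostly idle, which is how overprovisioning turns into wasted spend.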

Autoscaling: Two types of pod autoscalers are available for Kubernetes: the Horizontal Pod Autoscaler (HPA) and the Vertical Pod Autoscaler (VPA). HPA scales the number of pod replicas based on metrics such as CPU and memory usage, whereas VPA adjusts each pod's CPU and memory requests and limits based on observed usage.
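The core of the HPA decision is a ratio: the Kubernetes documentation describes the desired replica count as ceil(currentReplicas × currentMetricValue / desiredMetricValue). The Python sketch below reproduces that calculation. The function name, the replica bounds, and the sample values are assumptions for illustration, and the real controller handles additional cases (missing metrics, pods that are not ready, stabilization windows) that are left out here.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Approximate the HPA scaling rule for a single metric."""
    ratio = current_metric / target_metric
    # Skip scaling when the metric is already close enough to the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Keep the result within the configured replica bounds.
    return max(min_replicas, min(max_replicas, desired))


# Average CPU utilization of 90% against a 60% target: scale out.
print(desired_replicas(current_replicas=4, current_metric=90, target_metric=60))
# Average CPU utilization of 20% against a 60% target: scale in.
print(desired_replicas(current_replicas=4, current_metric=20, target_metric=60))
```

The tolerance check avoids constant small adjustments when usage hovers around the target, and the bounds prevent a metric spike from scaling the workload without limit.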

It is often a good idea to use the services of companies such as Anodot to manage a highly scalable Kubernetes deployment. Anodot provides production-grade Kubernetes deployment services that are highly scalable and performant. With Anodot’s powerful algorithms and multi-dimensional filters, you can analyze your Kubernetes deployment’s cluster-level and pod-level performance in-depth and identify underutilization at the node and pod level.
